Lessons learned from the first edition of a large second-year course in software construction

Practice paper on the philosophy and implementation of CS-214 Software Construction, SEFI Annual Conference 2024.

Authors: Shardul Chiplunkar[1] and Clément Pit-Claudel, EPFL, Lausanne, Switzerland

Conference Key Areas: Teaching technical knowledge in and across engineering disciplines

Keywords: functional programming, software engineering, correctness

© 2024 SEFI CC BY-NC-SA, reproduced here under license. PDF version is authoritative.

This paper lays out a case study of Software Construction, a new course in the undergraduate computer and communication sciences curriculum at EPFL. We hope to share some generalizable lessons we learned from running its first edition in Fall 2023. The course targets three core concepts—functional programming, real-world engineering, and correctness—and its design is informed by four principles—students should learn by doing, assignments should be self-motivating, knowledge should be built incrementally, and students should see progress over time. We illustrate how we realized these concepts and principles with practical examples of course materials and teaching methods that we hope will serve as useful inspiration to others teaching similar courses. Lastly, we present some encouraging preliminary data about course outcomes and share our speculations about experiments for its future evolution.

Introduction

Fall 2023 saw the first iteration of a new core course in the undergraduate computer and communication sciences curriculum at EPFL: Software Construction (CS-214). This practice paper describes the overarching course philosophy and how it was realized in course materials and teaching methods, particularly in their more unconventional and experimental aspects. We hope that others teaching similar courses will find it a useful case study.[2]

We begin with some background about how the course is situated within the curriculum (§ 2). Next, we lay out the three core concepts threaded throughout the course: functional programming, real-world engineering, and correctness (§ 3). We also describe a few informal pedagogical principles we tried to follow (§ 4). These concepts and principles are illustrated by a collection of practical examples, such as the design of an assignment or a lecture (§ 5). We present some preliminary data about course outcomes (§ 6) and conclude by speculating about possible experiments for future iterations (§ 7).

Volumes have been written about programming and software engineering education; we do not aim or even attempt to survey the literature, rigorously evaluate its claims, or make novel research contributions. We merely present one possible instantiation of a set of coherent ideas.

Background

Academic year 2023–24 was the first in which a complete redesign of the Computer and Communication Sciences (IC) undergraduate curriculum came into force. Among other objectives, the redesign consolidated some courses in response to complaints about fragmentation and high context-switching burden. Table 1 summarizes relevant parts of the new curriculum [EPFL2024]. The new Software Construction incorporates elements of the three struck-out, discontinued courses. Between the two IC Bachelor’s programs, our course is mandatory for Computer Science and optional for Communication Systems; our total enrolment in Fall 2023 was 383.

| Year | Course |
|---|---|
| 1 | Introduction to Programming and Practice of Object-oriented Programming (Java) |
| | Advanced Information, Computation, and Communication I, II (discrete mathematics and information theory) |
| | Fundamentals of Digital Systems (digital design and architecture) |
| | general education requirements in linear algebra, calculus, physics (mechanics), and the humanities |
| 2 | Software Construction |
| | Functional Programming and Parallelism and Concurrency (both Scala) |
| | general education requirements, electrical engineering |
| 3 | The Software Enterprise – From Ideas To Products (heavyweight project-based software engineering course) |
| | Software Engineering |
| | algorithms, compilers, parallelism and concurrency, and other computer and communication sciences electives |

Note that the broad base of the first year lets us assume basic technical knowledge of all our students and, say, create assignments to implement a physics simulation or a compression algorithm. Further, our course aims to prepare students for the courses that follow it, and for computing-related careers in general, by developing their core competencies in functional programming, real-world engineering, and correctness, giving them the confidence to build small but real software. We leave it to Software Enterprise to teach project management, collaboration, software architecture, and modern DevOps practices [Pirelli2024].

Core concepts

The three core concepts that Software Construction targets are functional programming λ, real-world engineering 🛠️, and correctness ✔️. We will use these symbols to tag the examples in § 5 “Illustrative practical examples” with the concepts relevant to each.

We teach functional programming λ, which we feel especially makes sense at EPFL for two reasons. First, EPFL is the birthplace and home of Scala, a programming language whose central tenet is to unify functional and object-oriented programming [OderskyEtAl2021]. Scala MOOCs and other educational materials have been developed at EPFL for over a decade. As Scala runs on the JVM with a Java-like object model, and as our students come in with two semesters of object-oriented Java programming experience, adding in functional programming with Scala next is a natural choice. The other reason we believe functional programming works well at this point in the EPFL IC curriculum is its conceptual connections to algebra and discrete mathematics. Our undergraduates build a substantial foundation in mathematics in their first year (as outlined in § 2 “Background”) which lets us explain functional programming in terms of mathematical functions, rewriting, substitution, and so on.

Although our course is about building software in the small, we believe that two aspects of real-world engineering 🛠️ are still relevant. One is common software engineering infrastructure, such as command-line interfaces, build tools, networks and filesystems, and version control. For instance, we teach uses of Git that students wouldn’t otherwise self-learn online, such as investigating code provenance and collaborating via patches.[3] The other aspect is “external” libraries or frameworks that someone else (often the course staff) wrote, that the student is not expected to read or understand, but only interact with through an API; or students’ own past work, that they can build upon with skills from intervening weeks. We hope to build students’ confidence as they see themselves improve and encourage them to write code for humans as well as computers, including their collaborators and their future selves.

Correctness ✔️ is our third core concept, referring to techniques to specify the absence of bugs and to prevent, catch, diagnose, and fix them. Diagnosing faults and troubleshooting are essential to any engineering discipline; debugging is the software counterpart, embodying a general scientific skill, viz., the scientific method [Agans2002], [Zeller2009], [Regehr2010]. Yet, it is seldom explicitly taught, and students generally lack competence in reasoning about (possibly faulty) programs [McCauleyEtAl2008], [FitzgeraldEtAl2008], [LiEtAl2019]. We train students to systematically make observations about code, determine expected behaviors, make hypotheses for explaining discrepancies, and test them out with controlled experiments. Complementarily, they write automated regression tests to replicate and guard against identified bugs, documentation to record assumptions and expectations, and pre-/post-conditions to monitor them at run time. We later introduce a more formal notion of specifications and program correctness proofs, some checked by computer.
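As a minimal sketch of what run-time monitoring of pre-/post-conditions can look like in Scala, the example below uses the standard `require` and `ensuring` methods. The function `isqrt` and its specification are hypothetical illustrations chosen for brevity, not taken from the course materials.

```scala
// Hypothetical example: integer square root with a contract checked at run time.
def isqrt(n: Int): Int = {
  // Precondition: the specification is only defined for non-negative inputs.
  require(n >= 0, s"isqrt is undefined for negative input $n")

  // Search for the largest r such that r * r <= n.
  // Long arithmetic avoids overflow when squaring values near sqrt(Int.MaxValue).
  @annotation.tailrec
  def search(r: Int): Int = {
    val next = r + 1L
    if (next * next > n) r else search(r + 1)
  }

  search(0)
}.ensuring(r => r.toLong * r <= n && (r + 1L) * (r + 1L) > n) // Postcondition
```

A violated precondition raises an `IllegalArgumentException` that blames the caller, whereas a violated postcondition raises an `AssertionError` that blames the implementation. A regression test for a previously observed bug can then be as small as `assert(isqrt(16) == 4)`.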

[3] These are instances of more general engineering skills in collaboration and version control that powerful tools like Git give us a golden opportunity to teach in the context of software [Wayne2021].

Pedagogical principles

This section describes some principles that inform how we teach the core concepts articulated above. Again, we tag each one with a symbol for later reference in § 5 “Illustrative practical examples”.

First, students should learn by doing👩🔬. Right from the first week of the course until the final exam, students should build real, albeit small, pieces of software. Every topic should have a large collection of ungraded exercises (pencil-and-paper or short programming problems) of varying difficulties and should figure in a graded lab (programming assignment). Students should have plenty of opportunities to receive help as they work on these assignments to maintain confidence. “Active learning” of this form has been shown to improve student performance and reduce disparities in learning outcomes [FreemanEtAl2014], [TheobaldEtAl2020].

A complementary principle is that assignments should be self-motivating🌟. A student should never have to wonder why they are being made to do a particular assignment, or whether a concept or skill will ever be relevant outside of class. Rather, each assignment should be fun in its own right, or useful for a realistic application (as opposed to contrived or toy applications), or ideally both. Labs should be self-contained pieces of software with visualizations and UIs whenever possible.

Another main principle is that knowledge should be built incrementally📚. This is in contrast to, say, introducing sets of new concepts in discrete units or modules, whereof the interconnections are addressed only post hoc, if at all. An incremental approach lets us build real software from scratch right from the start, with plenty of room to improve that we progressively explore. It also lets us clearly define what we expect students to know prior to the first week and not risk assuming further implicit background when switching between later topics. Anecdotally, this may be more inclusive of students with varied backgrounds—we were happy to find students among the top 5% who only started programming after they came to EPFL.

Lastly, as a corollary to the above, students should see progress over time📈: the progression of incrementally built knowledge should be brought to students’ attention. Course materials, including assignments, should make explicit connections to previous ones. Students should have chances to revisit their own past work in situ and discover for themselves the improvement in their abilities over time, which we believe bolsters student confidence and reinforces the value of what is being taught.

Illustrative practical examples

| | λ | 🛠️ | ✔️ | 👩🔬 | 🌟 | 📚 | 📈 |
|---|---|---|---|---|---|---|---|
| `find` lab | ◼ | ◼ | | ◼ | ◼ | | |
| HOFs index cards | ◼ | | | ◼ | | ◼ | ◼ |
| `find` with HOFs | | | | ◼ | ◼ | | ◼ |
| Incremental abstraction | | | ◼ | | | ◼ | |
| Music synthesis | | ◼ | | | ◼ | | |
| Computer proofs | | | ◼ | | ◼ | ◼ | |
| Interview training | | ◼ | | | ◼ | | |
| Weekly debriefs | | | | | | | ◼ |

Below, we illustrate the core concepts and pedagogical principles described in the two previous sections with some concrete instantiations from Fall 2023. Each is tagged as follows: functional programming λ, real-world engineering 🛠️, correctness ✔️; learning by doing 👩🔬, self-motivating assignments 🌟, incremental knowledge 📚, visible progress 📈.

- Lab assignment: the Unix `find` utility

The first lab assignment👩🔬 is to write a highly simplified version of the Unix `find` utility that locates files in a directory tree matching criteria like ‘last modified before Jan 1’. The value of such a tool is self-evident🌟, and upon completion, students are able to run their implementations to search their own computers. The key functional programmingλ concept exercised is structural recursion. The directory tree is inherently a recursive structure, and even for students who may be scared of or uncomfortable with recursion, a recursive solution is far more natural than an iterative one, as opposed to traditional unary natural number recursion exercises like the factorial function (why not just iterate?), or trickier recursions like the Euclidean GCD algorithm [Krishnamurthi2024]. In addition, right from this first lab, we introduce realistic engineering ideas🛠️ like interacting with the filesystem using a library.

- Index cards exercise for higher-order functions (HOFs)

An early exercise involves a set of index cards with the definitions of simple recursive functions on integer lists, like `contains` or `product`. Working in small groups, students are first asked to draw similarities between purposefully chosen pairs (e.g. `collectEven` and `removeZeroes`), annotating the cards if they want to, and then to physically group the cards by extending the patterns they find (e.g. adding in `intersection`). Next, they are tasked with generalizing these patterns by writing HOFsλ. In the process, or by comparing with others, they may realize that some patterns are just special cases of others, and we expect them to eventually arrive at some versions of `map`, `filter`, and `foldRight`👩🔬📈. We conclude by hinting that all the functions can actually be written as folds, and foreshadowing polymorphism (allowing for the same functions on strings) and generalized folds (e.g. on trees)📚.

- Lab callback: `find` with higher-order functions (HOFs)

Soon after the above, students revisit their lab submission to realize📈 that large parts of their `find` functions (`findBySizeGe`, `findByName`, etc.) were redundant. They rewrite `find` as a HOF that takes a Boolean predicate argument👩🔬. The utility of this refactoring, which shrinks the code by a factor of five, is self-evident🌟. Similar callbacks throughout the semester build on past work with newly learned skills in clear increments.

- Incremental abstraction and correctness

Abstracting over predicates to turn `find` into a HOF is typical of the incremental progression of ideas in the course. After students define `mapInt` over (monomorphic) lists of integers and `mapString` for strings, we teach them variance and polymorphism to abstract over types; or after noting how functions like `map` and `flatMap` work similarly for lists, option types, and `Future`s, we introduce the abstraction of monads📚. Incremental program transformations elegantly lend themselves to incremental reasoning about correctness✔️. For instance, in a single exercise set, we have students (i) observe that some recursive functions perform redundant computation on subproblems; (ii) make careful, encapsulated use of mutable state to fix the issue with memoization; (iii) generalize to an arbitrary function memoizer; and (iv) further observe that it may be sufficient for the memo to record only some subproblems, i.e. convert the function into a dynamic programming problem.

- Signal processing and music synthesis

A compelling real-world application of lazy evaluation in functional style is music synthesis🌟. Starting from just the Java Sound API,[4] an hour-long lecture builds combinators for low-level processing like resampling, higher-level effects like echoing, amplitude envelopes, sequencing based on musical scores, and so on, to synthesize realistic snippets🛠️. The lecture is presented in literate style using Alectryon [Pit-Claudel2020]. Using Scala lazy lists bypasses problems with buffer boundary conditions, single-use streams, and variable source-driven sample rates, while letting us build an elegant, compositional library.
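To give a flavor of the combinator style described above, here is a minimal sketch in Scala 3 that models a signal as a `LazyList` of samples. It is not the lecture's actual code: the names `Signal`, `sine`, `gain`, `mix`, `delay`, `echo`, and `seconds`, as well as the 44.1 kHz sample rate, are assumptions made for illustration, and playback through the Java Sound API is left out.

```scala
// A signal is modeled as a lazy, possibly infinite stream of samples.
type Signal = LazyList[Double]

val sampleRate = 44100 // samples per second (assumed for this sketch)

// Infinite sine wave at the given frequency in Hz.
def sine(freq: Double): Signal =
  LazyList.from(0).map(i => math.sin(2 * math.Pi * freq * i / sampleRate))

// Scale every sample by a constant factor.
def gain(g: Double)(s: Signal): Signal = s.map(_ * g)

// Mix two signals sample by sample; the result ends with the shorter input.
def mix(a: Signal, b: Signal): Signal =
  a.zip(b).map { case (x, y) => x + y }

// Delay a signal by prepending n samples of silence.
def delay(n: Int)(s: Signal): Signal = LazyList.fill(n)(0.0) #::: s

// Echo: the original mixed with a delayed, attenuated copy of itself.
// For finite inputs the echo tail is cut off where the original ends.
def echo(delaySamples: Int, decay: Double)(s: Signal): Signal =
  mix(s, delay(delaySamples)(gain(decay)(s)))

// Keep only the first t seconds of a signal.
def seconds(t: Double)(s: Signal): Signal = s.take((t * sampleRate).toInt)
```

Because every combinator is lazy, an expression such as `seconds(1.0)(echo(11025, 0.5)(sine(440.0)))` only ever computes the samples that are actually consumed, and the same infinite `sine(440.0)` value can be reused in several places without being exhausted.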

- Computer-checked proofs

In some early exercises, students write inductive proofs of functional properties (e.g. `append` is equivalent to `concat` with a singleton) as a series of rewrite rules✔️. This sets the stage for introducing computer-checked proofs based on the Lisa proof assistant📚 [GuilloudGambhirKunčak2023]. Students experience first-hand how a computer can make checking proofs less tedious and error-prone and increase confidence in correctness🌟.

- Coding interview training

In the final week, a lecture about coding interviews includes a mock interview where an instructor is given a programming puzzle and demonstrates how to approach the problem, think out loud, make progress in limited time, and answer questions about generalization and correctness🌟🛠️.

- Weekly debriefs

Every week throughout the semester, we post a debrief on the course website with a summary of student questions that may be of broader interest, tips for exercises and labs, and additional relevant material. The content is informed by periodic class polls and individual student interactions to let students learn from their peers and witness their collective progress📈.
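To make the `find` lab and its higher-order callback described earlier in this section more concrete, the following Scala 3 sketch shows structural recursion over a directory tree, with the search criterion passed in as a predicate. It is an illustration under simplifying assumptions: the in-memory `Entry` type and the helper `nameOf` are hypothetical stand-ins for the filesystem library used in the actual lab, and only the names `find`, `findByName`, and `findBySizeGe` come from the text.

```scala
// Hypothetical in-memory model of a directory tree
// (the actual lab talks to a real filesystem through a library).
enum Entry:
  case File(name: String, size: Long)
  case Dir(name: String, children: List[Entry])

import Entry.*

def nameOf(e: Entry): String = e match
  case File(name, _) => name
  case Dir(name, _)  => name

// Structural recursion: one case per constructor, one recursive call per child.
// The predicate p is the higher-order parameter that replaces specialized variants.
def find(root: Entry, p: Entry => Boolean): List[Entry] =
  val here = if p(root) then List(root) else Nil
  root match
    case File(_, _)       => here
    case Dir(_, children) => here ++ children.flatMap(child => find(child, p))

// The formerly separate functions become one-line instances of find.
def findByName(root: Entry, name: String): List[Entry] =
  find(root, e => nameOf(e) == name)

def findBySizeGe(root: Entry, minSize: Long): List[Entry] =
  find(root, {
    case File(_, size) => size >= minSize
    case Dir(_, _)     => false
  })
```

New criteria, such as a modification date, then need only a new predicate rather than a new traversal.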
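The four-step memoization exercise set described under “Incremental abstraction and correctness” can be sketched as follows in Scala 3. The Fibonacci function is our own choice of example, since the text does not say which recursive functions the exercises use, and all names here are hypothetical.

```scala
import scala.collection.mutable

// (i) Naive recursion recomputes the same subproblems exponentially often.
def fibNaive(n: Int): BigInt =
  if n < 2 then BigInt(n) else fibNaive(n - 1) + fibNaive(n - 2)

// (ii) and (iii) A generic memoizer. The mutable map is encapsulated:
// callers only ever see an ordinary function from A to B.
def memoize[A, B](f: A => B): A => B =
  val memo = mutable.Map.empty[A, B]
  a =>
    memo.get(a) match
      case Some(b) => b
      case None =>
        val b = f(a)
        memo(a) = b
        b

// Recursive calls go through the memoized function, so each n is computed once.
lazy val fibMemo: Int => BigInt = memoize[Int, BigInt] { n =>
  if n < 2 then BigInt(n) else fibMemo(n - 1) + fibMemo(n - 2)
}

// (iv) Only the two most recent subproblems are ever needed, so the memo
// shrinks to a pair of values threaded through a fold.
def fibDP(n: Int): BigInt =
  val (result, _) = (0 until n).foldLeft((BigInt(0), BigInt(1))) {
    case ((a, b), _) => (b, a + b)
  }
  result
```

Each step preserves the function's observable behavior, so the naive version can serve as an executable specification for the later ones, for instance by checking that they agree on small inputs.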

Supporting logistics

Below are some logistical details that let us better fulfill our educational objectives.

- Computerized final exam

The final bears special mention due to the fairness and logistics challenges posed by our large class size and the recent widespread availability of code-generating AI tools. The exam is a set of mini-labs, with no hidden tests, graded at scale with the same homegrown autograder infrastructure as for lab assignments. We conduct the exam on custom virtual machines in thin-client computer labs so that we can test students’ abilities in isolation from external help. Students do not have Internet access but do have access to course materials (including any published solutions), their own submitted work, and a standard Scala development setup. They are given opportunities to get comfortable with this environment beforehand but their actions are not persisted.

- Student assistant (SA) training workshop

We run a workshop to help our SAs better help students during office hours in the spirit of the course’s values. We have SAs read and discuss a short handout about professional and respectful conduct, how to enable active learning rather than simply answering questions, Pólya’s problem-solving method [Pólya1945], and other concordant guidelines, encouraging them to reflect on their own teaching and learning experiences. They also watch and respond to mock office-hours interactions of varying helpfulness. Finally, we recommend that they take a complementary SA training offered by CAPE.

[4] Seamlessly interoperable, like all Java libraries, with JVM-based Scala. We are exploring the possibility of using Scala.js and the Web Audio API in a browser instead for more interactivity.

Preliminary data

Fall 2023’s Software Construction was successful overall, despite being offered for the first time. Some quantitative metrics about the course were very encouraging: in the end-of-semester student course evaluations conducted by CAPE, the EPFL Teaching Support Centre, with a 25% response rate, 96% of respondents agreed or strongly agreed with the statement “Overall, I think this course is good.” on a 4-point Likert scale. This was the highest rating among all large courses in IC.

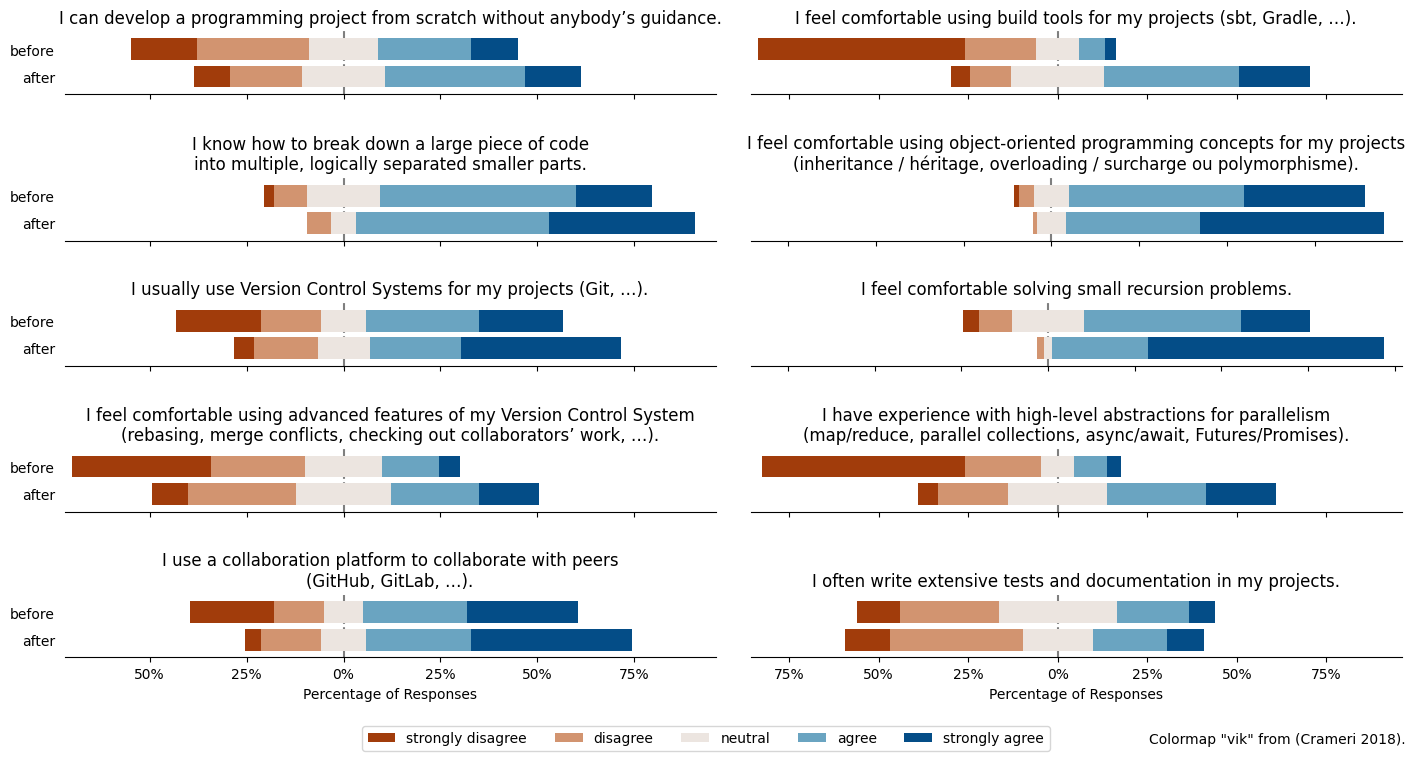

Another set of metrics was designed to evaluate the course’s high-level objective of “developing [students’] core competencies […] giving them the confidence to build small but real software” (from § 2 “Background”). We asked students whether they “feel comfortable”, “know how to”, or “often” use the techniques and tools we taught, on a 5-point Likert scale. The questions were presented before the first week (alongside other questions about students’ background) and after the final exam as part of CAPE’s course evaluation survey (alongside their regular questions common to all courses). All data was anonymized and only aggregate, non-identifiable statistics are reported in Figure 1. Students broadly felt more confident along all axes. While our course was not our students’ only activity during the semester, none of their concurrent courses addressed our topics[5], and second-years are not yet eligible to participate in research projects.

Figure 1: Respondents’ answers to Likert-scale questions about skills Software Construction aims to develop, in a questionnaire posted on our LMS before the first week of classes (“before”, n = 283), and in a questionnaire administered by CAPE after the final exam (“after”, n = 97). Our class size was 383. (Colormap “vik” from [Crameri2018].)

[5] Computer Architecture teaches some hardware programming; Numerical Methods for Visual Computing and ML uses Python as a means to use domain-specific libraries, and Signal Processing is largely theoretical with some Python assignments.

Looking ahead

Below, we outline some experiments that we didn’t have the time for or hadn’t thought of in Fall 2023. Grading is a common theme: our experience indicates that many students don’t do assignments if they aren’t graded, and don’t feel that they learned a topic if it wasn’t specifically targeted in grading.

- Develop a capstone group project

In Fall 2023, we laid the foundations in the form of a lab assignment for what we believe can grow into a fulfilling, open-ended capstone group project for the course: server-client webapps. Following a state-machine–based architecture, the webapp lab has students build two simple games, maintaining a single piece of server-side mutable state of which clients statelessly render projections. The framework is easy to extend to more complex applications, such as a chat room, an authentication meta-application, or classic board and card games. We envision these extensions being developed as group projects that students can showcase on a shared server at the end of the course. We plan to consult prior work on software capstone projects to make ours most effective [TenhunenEtAl2023], and in particular, for students not yet ready for full-blown project management techniques [KuhrmannMünch2018], [MarquesEtAl2017].
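One way to picture this architecture is the Scala 3 sketch below: a pure state machine describes the application, the server owns the only mutable state, and each client receives a per-user projection of it. The interface and the toy click-counting application are assumptions made for illustration; they are not the API of the course's actual framework.

```scala
// Hypothetical interface: names and signatures are assumptions.
trait StateMachine[E, S, V]:
  def init: S
  // Pure transition: how one user's event changes the shared state.
  def transition(state: S, user: String, event: E): S
  // Projection: what a given user gets to see; clients render it statelessly.
  def project(state: S, user: String): V

// A toy application: every user can click, and sees their own count and the leader.
enum Event:
  case Click

final case class State(clicks: Map[String, Int])
final case class View(yourClicks: Int, leader: Option[String])

object ClickGame extends StateMachine[Event, State, View]:
  def init: State = State(Map.empty)

  def transition(state: State, user: String, event: Event): State = event match
    case Event.Click =>
      State(state.clicks.updated(user, state.clicks.getOrElse(user, 0) + 1))

  def project(state: State, user: String): View =
    View(
      yourClicks = state.clicks.getOrElse(user, 0),
      leader = state.clicks.maxByOption(_._2).map(_._1)
    )

// The server holds the single piece of mutable state.
final class Server[E, S, V](sm: StateMachine[E, S, V]):
  private var state: S = sm.init
  def handle(user: String, event: E): Unit =
    state = sm.transition(state, user, event)
  def viewFor(user: String): V = sm.project(state, user)
```

In this style, extending the framework to a chat room or a board game amounts to supplying a different `StateMachine` instance, while networking and rendering stay unchanged.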

- Evaluate code comprehension at scale

Software engineering relies as much on understanding and reasoning about code as on writing it, and recent advances in AI will only make the former more relevant. We would like our grading strategies to reflect this. We already know how to automatically grade correctness judgements for small programs (MCQs on a paper exam), pre-/post-condition specifications (run-time monitoring), and some formal proofs of functional properties (Lisa). Beyond that, we could evaluate test writing by running students’ tests for a lab assignment on a provided buggy implementation and on other students’ submissions, and awarding points based on number of bugs identified, number uniquely identified across peers, code coverage, etc., learning from prior research on peer evaluation of software [GroeneveldVennekensAerts2020]. Automated execution visualization tools in the style of PythonTutor [Guo2013] may also be relevant. However, important skills we don’t yet know how to evaluate automatically include debugging, documentation writing, program structure (e.g. types, class hierarchies), and code style.
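As a rough sketch of the test-evaluation idea above, the Scala 3 snippet below scores a student's test suite by the number of known-buggy implementations it rejects, provided it accepts a reference implementation. The scoring rule, the types, and the sorting example are all hypothetical simplifications; a real grader would run actual test frameworks and handle exceptions and timeouts.

```scala
// Hypothetical model: the implementation under test sorts a list of integers.
type Impl = List[Int] => List[Int]
// A student's test suite, reduced to "do all tests pass on this implementation?"
type TestSuite = Impl => Boolean

// Points are the number of buggy implementations the suite catches.
// A suite that rejects the correct reference implementation earns nothing.
def score(suite: TestSuite, reference: Impl, buggy: List[Impl]): Int =
  if !suite(reference) then 0
  else buggy.count(impl => !suite(impl))

// Example implementations: one correct, two with seeded bugs.
val reference: Impl = _.sorted
val dropsDuplicates: Impl = _.distinct.sorted
val sortsDescending: Impl = _.sorted.reverse

// Example suites of increasing quality.
val weakSuite: TestSuite = impl => impl(List(3, 1, 2)) == List(1, 2, 3)
val strongSuite: TestSuite =
  impl => weakSuite(impl) && impl(List(2, 2, 1)) == List(1, 2, 2)
val wrongSuite: TestSuite = impl => impl(List(1, 2)) == List(2, 1)
```

Here the weak suite catches only the descending sort, the strong suite also catches the dropped duplicates, and the suite encoding a wrong expectation scores zero because it fails on the reference.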

- Provide in-person feedback and evaluation

Code comprehension and organization may be better suited to individual, human judgment. We find that one-on-one interactions with staff can be far more effective than other forms of evaluation and feedback—and secondarily, than other forms of detecting cheating. Back-of-the-envelope estimates suggest that such “checkoffs” may be feasible to scale, for, say, 1 out of every 2 weekly labs, if SAs have appropriate training.

- Teach the teachers: SA training

SAs play a key role in our vision for the course. Ideally, they would be able to judge a student’s understanding of code, give design and style feedback, help fix bugs by teaching debugging rather than debugging themselves, answer questions without giving away answers, track and communicate common difficulties with other staff, and participate in developing new materials. (This would also take load off of time-constrained PhD students and let them assume a more supervisory role.) Being an SA is a challenging job—one that we hope to explicitly train them for, and one that we think is a worthwhile outcome of the course in its own right, whether or not they return as staff in subsequent years.

- Highlight real-world engineering in labs

Despite lab assignments having been the primary training ground for debugging, version control, working with libraries and other people’s code, program design, and code evolution, these topics were not tested explicitly. Students consequently felt that labs didn’t sufficiently exercise real-world engineering. We aim to address this discrepancy by calling out engineering topics where exercised in each lab and grading them when possible. For instance, for Git exercises, students could submit Git bundles for automatic grading. Other courses situated similarly to ours may have useful lessons, like [McBurneyMurphy2021].

- Reuse classic exercises

Learning by doing is a principle shared by our colleagues in mathematics and physics, whose textbooks and course materials include dozens of small cut-and-dried exercises per topic. Students are typically assigned a few key exercises and are free to do more. We believe we can likewise supplement problems we design with others of exceptional quality from classic, highly acclaimed texts like The Art of Computer Programming [Knuth2022] and Structure and Interpretation of Computer Programs [AbelsonSussman1996].

Conclusion

Software Construction served, and will continue to serve, as a testing ground for ideas about programming and software engineering education. In this first iteration, we identified a coherent set of ideas that work well together, targeting the three core concepts of functional programming, real-world engineering, and correctness (§ 3), and following some informal pedagogical principles (§ 4). We share some concrete illustrative examples in this paper with the hope that they will be useful for others trying to adapt our approach to their own contexts (§ 5). After presenting some preliminary quantitative data about course outcomes (§ 6), we identify avenues for future experimentation (§ 7). We hope the lessons shared in this paper will prove as helpful to other educators as they will be to us when iterating on the course.

Acknowledgments

In Fall 2023, Software Construction was co-taught by the second author and Profs. Viktor Kunčak and Martin Odersky. Many materials and ideas were adapted from Functional Programming and Parallelism and Concurrency, taught in the past at EPFL by Odersky and Kunčak, and MOOCs about Scala and programming, developed by Odersky and others at the Scala Center. Software Construction teaching assistants along with the first author were (listed alphabetically) Yann Bouquet, Matthieu Bovel, Samuel Chassot, Sankalp Gambhir, Yawen Guan, Anna Herlihy, Yichen Xu, and Yaoyu Zhao. Lastly, the course would not have been possible without our great team of Bachelor’s and Master’s student assistants.

We thank Fabien Salvi, our system administrator, for technical support. We also thank Joelyn de Lima and the rest of the CAPE team for productive discussions about the course and this paper.

References

Abelson, Harold, and Gerald J. Sussman. 1996. Structure and Interpretation of Computer Programs. 2nd ed. Cambridge, MA: MIT Press.

Agans, David J. 2002. Debugging. USA: AMACOM.

Crameri, Fabio. 2018. “Scientific colour maps”. Zenodo. doi:10.5281/zenodo.1243862.

EPFL. 2024. “Study plans and regulations”. Study Management - EPFL. Accessed April 8, 2024. https://www.epfl.ch/education/studies/en/rules-and-procedures/study_plans/.

Fitzgerald, Sue, Gary Lewandowski, Renée McCauley, Laurie Murphy, Beth Simon, Lynda Thomas, and Carol Zander. 2008. “Debugging: Finding, Fixing and Flailing, a Multi-Institutional Study of Novice Debuggers”. Computer Science Education (Routledge) 18: 93–116. doi:10.1080/08993400802114508.

Freeman, Scott, Sarah L. Eddy, Miles McDonough, Michelle K. Smith, Nnadozie Okoroafor, Hannah Jordt, and Mary Pat Wenderoth. 2014. “Active Learning Increases Student Performance in Science, Engineering, and Mathematics”. Proceedings of the National Academy of Sciences 111: 8410–8415. doi:10.1073/pnas.1319030111.

Groeneveld, Wouter, Joost Vennekens, and Kris Aerts. 2020. “Engaging Software Engineering Students in Grading: The Effects of Peer Assessment on Self-Evaluation, Motivation, and Study Time”. Edited by Jan van der Veen, Natascha van Hattum-Janssen, Hannu-Matti Järvinen, Tinne de Laet and Ineke ten Dam. Proceedings of the SEFI 48th Annual Conference 788–798.

Guilloud, Simon, Sankalp Gambhir, and Viktor Kunčak. 2023. “LISA - a Modern Proof System”. Edited by Adam Naumowicz and René Thiemann. 14th International Conference on Interactive Theorem Proving (ITP 2023). Dagstuhl, Germany: Schloss Dagstuhl – Leibniz-Zentrum für Informatik. 17:1–17:19. doi:10.4230/LIPIcs.ITP.2023.17.

Guo, Philip J. 2013. “Online Python Tutor: Embeddable Web-Based Program Visualization for CS Education”. Proceedings of the 44th ACM Technical Symposium on Computer Science Education. New York, NY, USA: Association for Computing Machinery. 579–584. doi:10.1145/2445196.2445368.

Knuth, Donald E. 2022. The Art of Computer Programming: Volumes 1–4B. Addison-Wesley Professional.

Krishnamurthi, Shriram. 2024. How Not to Teach Recursion. Accessed April 7, 2024. https://parentheticallyspeaking.org/articles/how-not-to-teach-recursion/.

Kuhrmann, Marco, and Jürgen Münch. 2018. “Enhancing Software Engineering Education Through Experimentation: An Experience Report”. 2018 IEEE International Conference on Engineering, Technology and Innovation (ICE/ITMC). 1–9. doi:10.1109/ICE.2018.8436357.

Li, Chen, Emily Chan, Paul Denny, Andrew Luxton-Reilly, and Ewan Tempero. 2019. “Towards a Framework for Teaching Debugging”. Proceedings of the Twenty-First Australasian Computing Education Conference. New York, NY, USA: Association for Computing Machinery. 79–86. doi:10.1145/3286960.3286970.

Marques, Maíra, Sergio F. Ochoa, María Cecilia Bastarrica, and Francisco J. Gutierrez. 2017. “Enhancing the Student Learning Experience in Software Engineering Project Courses”. IEEE Transactions on Education 61: 63–73. doi:10.1109/TE.2017.2742989.

McBurney, Paul W., and Christian Murphy. 2021. “Experience of Teaching a Course on Software Engineering Principles Without a Project”. Proceedings of the 52nd ACM Technical Symposium on Computer Science Education. New York, NY, USA: Association for Computing Machinery. 122–128. doi:10.1145/3408877.3432550.

McCauley, Renée, Sue Fitzgerald, Gary Lewandowski, Laurie Murphy, Beth Simon, Lynda Thomas, and Carol Zander. 2008. “Debugging: A Review of the Literature from an Educational Perspective”. Computer Science Education (Routledge) 18: 67–92. doi:10.1080/08993400802114581.

Odersky, Martin, Lex Spoon, Bill Venners, and Frank Sommers. 2021. Programming in Scala. 5th ed. Walnut Creek, CA, USA: Artima Press.

Pirelli, Solal. 2024. “Scalable Teaching of Software Engineering Theory and Practice: An Experience Report”. Proceedings of the 46th International Conference on Software Engineering: Software Engineering Education and Training. New York, NY, USA: Association for Computing Machinery. 286–296. doi:10.1145/3639474.3640053.

Pit-Claudel, Clément. 2020. “Untangling Mechanized Proofs”. Proceedings of the 13th ACM SIGPLAN International Conference on Software Language Engineering. Virtual USA: ACM. 155–174. doi:10.1145/3426425.3426940.

Pólya, George. 1945. How to Solve It. Princeton University Press.

Regehr, John. 2010. How to Debug. July 4. Accessed April 7, 2024. https://blog.regehr.org/archives/199.

Tenhunen, Saara, Tomi Männistö, Matti Luukkainen, and Petri Ihantola. 2023. “A Systematic Literature Review of Capstone Courses in Software Engineering”. Information and Software Technology 159: 107191. doi:10.1016/j.infsof.2023.107191.

Theobald, Elli J., Mariah J. Hill, Elisa Tran, Sweta Agrawal, E. Nicole Arroyo, Shawn Behling, Nyasha Chambwe, et al. 2020. “Active Learning Narrows Achievement Gaps for Underrepresented Students in Undergraduate Science, Technology, Engineering, and Math”. Proceedings of the National Academy of Sciences 117: 6476–6483. doi:10.1073/pnas.1916903117.

Wayne, Hillel. 2021. “What engineering can teach (and learn) from us”. Hillel Wayne's blog. January 22. Accessed April 1, 2024. https://www.hillelwayne.com/post/what-we-can-learn/.

Zeller, Andreas. 2009. Why Programs Fail: A Guide to Systematic Debugging. 2nd ed. San Francisco, CA, USA: Morgan Kaufmann Publishers Inc.